Packaging and Architecture

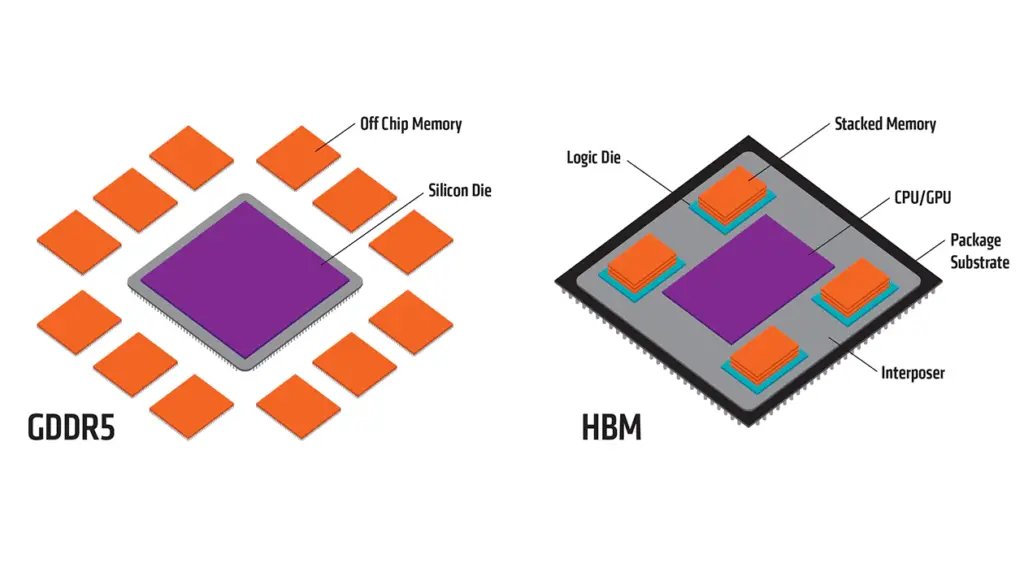

When it comes to DDR (Double Data Rate) and HBM (High-Bandwidth Memory), the differences start with packaging and architecture. DDR memory is typically deployed as off-chip DIMMs (Dual In-line Memory Modules): separate modules plugged into slots on the motherboard, some distance away from the CPU. HBM, on the other hand, sits inside the same package as the processor. Several DRAM dies are stacked on top of each other and connected vertically with Through-Silicon Vias (TSVs), and each stack is usually placed next to the CPU or GPU die on a silicon interposer rather than on top of it. These short, dense in-package connections make a much wider interface practical, which translates into far higher bandwidth and lower energy per bit transferred, with at most a modest reduction in latency.

Bandwidth and Pin Count

One of the main advantages of HBM is that it offers much higher bandwidth than DDR-based DIMMs. Because HBM is part of the same package as the processor, it can use a far larger number of pins for command, address, and data: an HBM2 stack, for example, exposes a 1024-bit data interface divided into eight 128-bit channels, compared with the 64-bit data bus of a standard DDR4 DIMM. This enables much more parallel data access, resulting in a wider bus with more channels and higher bandwidth. Widening a DIMM interface by a similar factor is not practical: the CPU package would need a matching increase in pin count, and routing that many high-speed signals across the motherboard PCB while preserving signal integrity would be infeasible.
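Peak bandwidth follows directly from bus width and per-pin data rate, which is why a wider bus matters so much. The minimal sketch below assumes the textbook formula (bytes per transfer times transfers per second) and uses two illustrative configurations, a DDR4-3200 DIMM and an HBM2 stack at 2.0 Gb/s per pin; the function name is hypothetical, and real parts should be checked against their JEDEC specifications or datasheets.

```python
def peak_bandwidth_gbps(bus_width_bits: int, data_rate_mtps: float) -> float:
    """Peak theoretical bandwidth in GB/s (1 GB = 10**9 bytes).

    bus_width_bits: number of data (DQ) pins in the interface.
    data_rate_mtps: transfers per second per pin, in mega-transfers per second.
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * data_rate_mtps * 1e6 / 1e9


# Illustrative configurations (assumed values, not tied to a specific product).
configs = {
    "DDR4-3200 DIMM (64-bit)": (64, 3200),
    "HBM2 stack (1024-bit @ 2.0 Gb/s per pin)": (1024, 2000),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gbps(width, rate):.1f} GB/s")
```

Even though the DDR4 pins run faster per pin in this comparison, the 16x wider HBM interface yields roughly 10x the peak bandwidth.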

Latency and Throughput

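HBM's proximity to the processor die can also shave some time off the interconnect portion of latency compared with DDR-based DIMMs, although DRAM access latency is dominated by the memory array itself, so the difference is modest. Throughput is where HBM clearly pulls ahead. Throughput depends on both bandwidth and latency: by Little's law, sustained throughput equals the amount of data in flight divided by latency, capped by the peak bandwidth, and HBM's many channels allow far more requests to be in flight at once. Bandwidth-hungry workloads therefore benefit the most. Capacity, however, is where DDR memory is still the clear winner: a server can hold many DIMMs per socket, whereas each HBM stack contains only a handful of DRAM dies (for example, 4-high or 8-high stacks), and only a few stacks fit in one package.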
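To make the relationship between bandwidth, latency, and throughput concrete, here is a minimal sketch based on Little's law. The request size (64-byte cache lines), the 100 ns loaded latency, and the 256 GB/s peak are hypothetical numbers chosen for illustration, and the model ignores effects such as bank conflicts and refresh.

```python
def achieved_bandwidth_gbps(outstanding_requests: int,
                            request_bytes: int,
                            latency_ns: float,
                            peak_gbps: float) -> float:
    """Estimate sustained bandwidth with Little's law, capped at the peak rate.

    Little's law: data in flight = throughput * latency, so
    throughput = data in flight / latency. One byte per nanosecond is 1 GB/s.
    """
    if latency_ns <= 0:
        raise ValueError("latency must be positive")
    bytes_in_flight = outstanding_requests * request_bytes
    return min(bytes_in_flight / latency_ns, peak_gbps)


# Hypothetical numbers: 64-byte requests, 100 ns latency, 256 GB/s peak.
for in_flight in (10, 100, 1000):
    bw = achieved_bandwidth_gbps(in_flight, 64, 100.0, 256.0)
    print(f"{in_flight:5d} requests in flight -> {bw:.1f} GB/s")
```

With latency held fixed, only more concurrency raises throughput, and only until the peak-bandwidth cap is reached; a wider interface with more channels is what raises that cap.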

Protocol, PHY, and IO Design

In terms of protocol, HBM is derived from DDR and still uses dual-edge data strobing, transferring data on both edges of the clock. However, its much wider data bus requires many more DQS strobe signals, and it is organized into more independent channels. The logical and electrical design of the HBM PHY and I/O is also different, because of the signal-integrity and power challenges of transmitting data through TSVs, and the shape of the DRAM die influences how these circuits are floorplanned.
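The arithmetic behind dual-edge strobing and strobe counts can be sketched as follows. The doubling of transfers per clock is inherent to double data rate signaling, and one DQS pair per byte lane matches a DDR4 DIMM built from x8 devices. The group size of 32 DQ per strobe used for the HBM line is an assumption for illustration only; the actual grouping depends on the HBM generation and should be taken from the relevant JEDEC standard. The function names are hypothetical.

```python
def transfers_per_second(clock_hz: float) -> float:
    """Double data rate signaling: one transfer on each clock edge."""
    return 2 * clock_hz


def strobe_count(bus_width_bits: int, dq_per_strobe: int) -> int:
    """Number of data-strobe groups needed to cover a data bus."""
    if bus_width_bits % dq_per_strobe:
        raise ValueError("bus width must be a multiple of the strobe group size")
    return bus_width_bits // dq_per_strobe


# DDR4-3200: a 1600 MHz I/O clock gives 3200 MT/s per pin.
print(transfers_per_second(1.6e9) / 1e6, "MT/s")

# 64-bit DDR4 DIMM built from x8 devices: one DQS pair per byte lane.
print("DDR4 x8 DIMM strobes:", strobe_count(64, 8))

# 1024-bit HBM stack; 32 DQ per strobe is an ASSUMED grouping for illustration.
print("HBM stack strobes (assumed grouping):", strobe_count(1024, 32))
```

Whatever the exact grouping, the strobe count scales with bus width, so a 16x wider bus needs many more strobe signals to be generated and routed.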

Applications and Use Cases

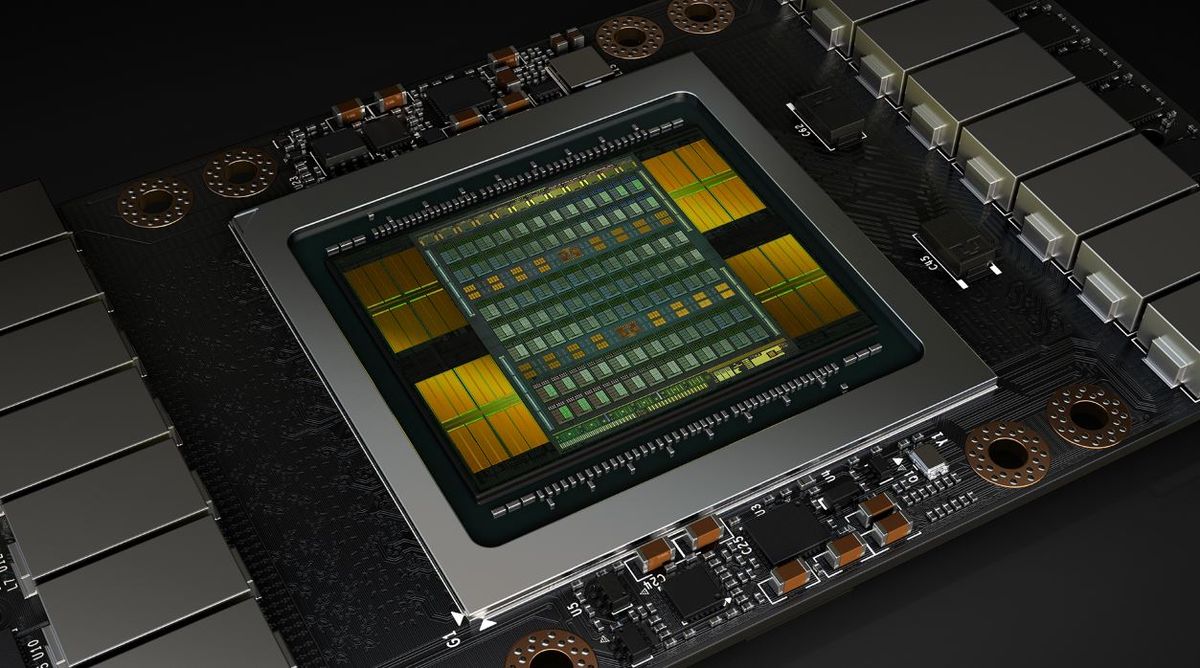

From an application point of view, DDR-based DRAM DIMMs remain the main system memory in most client and server systems, while HBM is used where high bandwidth (and, to a lesser extent, low latency) matters more than capacity, since HBM offers less of it. AMD has used HBM on several of its discrete GPUs, and other semiconductor companies use HBM-based DRAM as a separate local memory or cache tier for high-end server workloads such as AI and machine learning. Keeping large working sets in HBM, close to the processor, instead of fetching them from system memory gives these workloads a performance boost.
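When HBM acts as a cache in front of DDR, the benefit depends heavily on how often data is found in HBM. The minimal sketch below uses a deliberately simplified model: each byte is served either by HBM (a hit) or by DDR (a miss), the two are not overlapped, and fill or writeback traffic is ignored. The hit rates and the 256 GB/s and 25.6 GB/s figures are hypothetical, and the function name is illustrative.

```python
def effective_bandwidth_gbps(hit_rate: float, hbm_gbps: float, ddr_gbps: float) -> float:
    """Simplified effective bandwidth of an HBM cache in front of DDR.

    Assumes each byte is served by HBM (hit) or DDR (miss), with no overlap
    between the two and no fill or writeback traffic.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be in [0, 1]")
    # Time to move 1 GB is the hit-weighted sum of the per-tier times.
    seconds_per_gb = hit_rate / hbm_gbps + (1.0 - hit_rate) / ddr_gbps
    return 1.0 / seconds_per_gb


# Hypothetical tier bandwidths: 256 GB/s HBM, 25.6 GB/s DDR.
for h in (0.5, 0.9, 0.99):
    print(f"hit rate {h:.0%}: {effective_bandwidth_gbps(h, 256.0, 25.6):.1f} GB/s")
```

Under these assumptions, even a 10% miss rate drops the effective bandwidth to about 135 GB/s, which is why the cache approach pays off mainly when the working set fits in HBM.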

Gaming and High-Performance Applications

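So, what happens when you run an AAA video game with full physics simulation on a gaming computer with 64 GB of system RAM and a video card that uses HBM? The DDR system memory provides the capacity for the game's assets and simulation state, while the HBM on the card serves the GPU's bandwidth-heavy work, such as reading textures and writing frame buffers. GPUs hide latency by keeping many memory accesses in flight, so HBM's benefit here comes mainly from its higher bandwidth and the higher throughput that bandwidth enables; how much that translates into higher frame rates depends on how memory-bound the particular game is.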
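One way to see why graphics workloads care about bandwidth is to divide memory bandwidth by frame rate, which gives an upper bound on how much data the GPU can move per frame. The sketch below is a back-of-the-envelope calculation; the card bandwidths and frame rates are hypothetical, and real games never reach the theoretical peak.

```python
def traffic_budget_per_frame_gb(bandwidth_gbps: float, fps: float) -> float:
    """Upper bound on memory traffic per frame: bandwidth divided by frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return bandwidth_gbps / fps


# Hypothetical card bandwidths; real values depend on the specific GPU.
for label, bw in (("256 GB/s", 256.0), ("512 GB/s", 512.0)):
    for fps in (60, 144):
        budget = traffic_budget_per_frame_gb(bw, fps)
        print(f"{label} at {fps} fps: at most {budget:.2f} GB of memory traffic per frame")
```

Higher frame rates shrink the per-frame budget, so a bandwidth-bound game benefits more from extra bandwidth than a compute-bound one.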

Network Congestion and Latency

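Network congestion offers a useful analogy for why bandwidth affects latency. In a network, latency is not just propagation delay: when traffic competes for a link, a lack of bandwidth causes queuing delay, which can dominate the total. A memory interface behaves the same way. When many cores or GPU threads compete for it, requests queue up as utilization approaches the available bandwidth, and the observed latency rises well above the unloaded access time. HBM's higher bandwidth keeps utilization lower for the same load, which keeps queuing delay, and therefore loaded latency, under control in bandwidth-heavy applications.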
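The effect of bandwidth on queuing delay can be illustrated with the textbook M/M/1 queue, where the mean wait before service is utilization / (service rate - arrival rate). This is a simplification: it assumes Poisson arrivals and exponential service times, which a real DRAM controller does not have. The load and capacity values below are hypothetical and expressed in requests per nanosecond.

```python
import math


def mm1_queueing_delay(arrival_rate: float, service_rate: float) -> float:
    """Mean waiting time in queue (excluding service) for an M/M/1 queue.

    Rates are in requests per unit time; the result is in the same time unit.
    """
    if arrival_rate >= service_rate:
        return math.inf  # utilization >= 1: the queue grows without bound
    utilization = arrival_rate / service_rate
    return utilization / (service_rate - arrival_rate)


# Hypothetical load: 0.8 requests/ns arriving at a memory interface.
load = 0.8
for capacity in (1.0, 2.0):  # requests/ns the interface can serve
    wait = mm1_queueing_delay(load, capacity)
    print(f"capacity {capacity} req/ns -> utilization {load / capacity:.0%}, "
          f"mean queueing delay {wait:.2f} ns")
```

Doubling capacity at the same load cuts utilization from 80% to 40% and reduces the mean queueing delay by more than 10x in this model, which is the same mechanism that makes extra bandwidth relieve network congestion.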

Conclusion

In conclusion, DDR and HBM are two different technologies with different strengths and weaknesses. DDR is still the clear winner when it comes to capacity, while HBM provides far higher bandwidth, and with it lower loaded latency in bandwidth-heavy workloads, making it the better choice for applications whose performance is limited by memory bandwidth. By understanding the differences between DDR and HBM, developers and engineers can make informed decisions about which technology to use in their applications, resulting in better performance and efficiency.